[1] Z. Zhang, B. D. Rao, Iterative Reweighted Algorithms for Sparse Signal Recovery with Temporally Correlated Source Vectors, ICASSP 2011.

[2] Z. Zhang, B. D. Rao, Exploiting Correlation in Sparse Signal Recovery Problems: Multiple Measurement Vectors, Block Sparsity, and Time-Varying Sparsity, ICML 2011 Workshop on Structured Sparsity: Learning and Inference.

(Thanks to Igor for highlighting this paper on his blog, Nuit Blanche.)

[1] gives the iterative reweighted L2 version of T-SBL/T-MSBL. The most interesting thing is that this insight motivated us to modify most existing iterative reweighted L2 algorithms for better performance in the presence of temporally correlated source vectors. Yes, I mean "most existing iterative reweighted L2 algorithms". Although the paper only gives two examples, I have indeed modified other famous reweighted L2 algorithms, such as Daubechies' algorithm.

[2] gives the iterative reweighted L1 version of T-SBL/T-MSBL (of course, there is other interesting material in [2]). Similarly, this motivated us to modify existing iterative reweighted L1 algorithms, such as the group Lasso (note that the group Lasso can be applied to the MMV model).

The key idea is (I have emphasized this many times in my blog): replacing the Lq norm (such as L2 norm, L_infinity norm) imposed on the rows of the solution matrix by the Mahalanobis distance measure, i.e.:
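In symbols, writing X_{i\cdot} for the i-th row of the solution matrix and B for a positive definite matrix that models the temporal correlation within a row, the replacement looks roughly like this (the notation is my assumption here; see [1] for the exact form):

```latex
\|X_{i\cdot}\|_q
\;\longrightarrow\;
\sqrt{X_{i\cdot}\, B^{-1}\, X_{i\cdot}^{T}}
```

With B = I the right-hand side is just the L2 norm of the row, so the usual row-norm penalty is the special case in which the temporal correlation is ignored.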

Of course, the matrix B needs to be learned adaptively from the data. However, in some cases you can pre-define it before running the algorithms.

Here is an example (taken from [1]) showing how to modify the M-FOCUSS algorithm (a typical iterative reweighted L2 algorithm).

The original M-FOCUSS is given by:

The learning rule for the matrix B is similar to the one in T-MSBL. See [1] for details. Let's see what happens to the performance.
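Before the numbers, here is a minimal NumPy sketch of the idea. It is not the tMFOCUSS code from my website. It assumes the standard regularized M-FOCUSS iteration, keeps B fixed (pre-defined) instead of learning it, and the function name `reweighted_l2_mmv` and its parameters are hypothetical names chosen for illustration. Passing `B=None` gives the plain M-FOCUSS row weights; passing a matrix B swaps in the Mahalanobis measure.

```python
import numpy as np

def reweighted_l2_mmv(Phi, Y, lam=1e-3, p=0.8, B=None, n_iter=50):
    """Sketch of a reweighted L2 (M-FOCUSS style) solver for Y = Phi X + noise.

    B=None  -> row weights from the squared L2 norm (plain M-FOCUSS).
    B given -> row weights from x_i B^{-1} x_i^T (Mahalanobis measure).
    B is held fixed here (an assumption); [1] learns it from the data.
    """
    N, M = Phi.shape
    L = Y.shape[1]
    B_inv = np.eye(L) if B is None else np.linalg.inv(B)
    I_N = np.eye(N)

    # Regularized minimum-norm solution as the starting point.
    X = Phi.T @ np.linalg.solve(Phi @ Phi.T + lam * I_N, Y)

    for _ in range(n_iter):
        # d[i] = x_i B^{-1} x_i^T for every row x_i of X.
        d = np.einsum('il,lk,ik->i', X, B_inv, X)
        # Row weights; the small floor keeps rows from becoming exactly zero.
        w = np.maximum(d, 1e-12) ** (1.0 - p / 2.0)
        PhiW = Phi * w  # same as Phi @ diag(w)
        # X = W Phi^T (lam I + Phi W Phi^T)^{-1} Y
        X = PhiW.T @ np.linalg.solve(PhiW @ Phi.T + lam * I_N, Y)
    return X
```

For rows that follow an AR(1) process with coefficient 0.9, a natural pre-defined choice would be the Toeplitz matrix with entries 0.9^|i-j|, passed as `B`.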

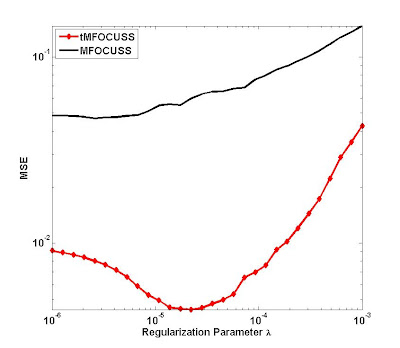

Here is a simulation: The Gaussian dictionary matrix was of size 50 x 200. The number of nonzero rows in the solution matrix was 20. The temporal correlation of each nonzero row was 0.9. The number of measurement vectors was 4. Noise standard variance was 0.01. To avoid the effect of a poorly chosen regularization parameter \lambda, we chose 30 candidate values for \lambda, and for each value we ran the original MFOCUSS and the new tMFOCUSS for 300 trials. The averaged performance as a function of \lambda is shown below:

See the improvement? Funny, ha!

The code of tMFOCUSS and the simulation can be downloaded from my website: https://sites.google.com/site/researchbyzhang/software
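If you just want a quick feel for the setup without downloading anything, the data for one trial can be generated along these lines. This is a sketch under my own assumptions, not the downloadable simulation code: each nonzero row is drawn as a unit-variance AR(1) process with coefficient 0.9, dictionary columns are normalized to unit norm, and the quoted noise level is treated as a standard deviation. The function name `make_mmv_problem` and its parameters are hypothetical.

```python
import numpy as np

def make_mmv_problem(n_meas=50, n_atoms=200, n_active=20, n_vectors=4,
                     beta=0.9, noise_std=0.01, seed=0):
    """Generate one MMV trial Y = Phi X + noise with temporally correlated rows."""
    rng = np.random.default_rng(seed)

    # Gaussian dictionary; unit-norm columns are an assumption of this sketch.
    Phi = rng.standard_normal((n_meas, n_atoms))
    Phi /= np.linalg.norm(Phi, axis=0)

    # Randomly chosen support (indices of the nonzero rows).
    support = rng.choice(n_atoms, size=n_active, replace=False)

    # Each nonzero row is an AR(1) sequence with coefficient beta,
    # scaled so that every entry has unit variance.
    rows = np.empty((n_active, n_vectors))
    rows[:, 0] = rng.standard_normal(n_active)
    for t in range(1, n_vectors):
        innovation = rng.standard_normal(n_active)
        rows[:, t] = beta * rows[:, t - 1] + np.sqrt(1.0 - beta ** 2) * innovation

    X = np.zeros((n_atoms, n_vectors))
    X[support] = rows

    # noise_std is read as a standard deviation (assumption).
    Y = Phi @ X + noise_std * rng.standard_normal((n_meas, n_vectors))
    return Phi, X, Y, support
```

Sweeping \lambda over a grid and averaging the recovery error over many seeds would then mimic the experiment described above.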

Other examples can be found in my paper [1].

Well, now let's go to the stuff on iterative reweighted L1 algorithms for the MMV model. The framework of reweighted L1 is given by:

where its weights are:

Now, we replace the Lq norm in the weights by the Mahalanobis distance measure, obtaining:
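Written out, the three pieces look roughly as follows. This is the common textbook form of reweighted L1 for the MMV model; the symbols Y (measurements), \Phi (dictionary), the iteration index k, and the small constant \epsilon > 0 that keeps the weights finite are my assumptions here, so check [2] for the exact expressions.

```latex
% Reweighted L1 framework for the MMV model
X^{(k+1)} = \arg\min_{X} \; \|Y - \Phi X\|_F^2
            + \lambda \sum_i w_i^{(k)} \, \|X_{i\cdot}\|_q

% Usual weights
w_i^{(k)} = \frac{1}{\|X_{i\cdot}^{(k)}\|_q + \epsilon}

% Weights with the Lq norm replaced by the Mahalanobis distance measure
w_i^{(k)} = \frac{1}{\sqrt{X_{i\cdot}^{(k)} \, B^{-1} \, \big(X_{i\cdot}^{(k)}\big)^{T}} + \epsilon}
```

Only the weights change; the penalty term in the first line keeps its Lq norm.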

A noiseless simulation was carried out (see [2] for details). The temporal correlation of each row of the solution matrix was 0.9. The matrix B can be learned using the rule given in the paper, but here it was set to the true value. The result is shown below:

Not surprisingly, we see the improvement! But I have to say, in this case, we may have to solve a non-convex problem.

Interesting, right? Why not modify your favorite reweighted L1 or L2 algorithms to improve their performance in the presence of temporally correlated source vectors? You should know that, in practice, we often encounter cases where the source vectors are correlated (in EEG/ERP source localization, the correlation can be larger than 0.95!).

But note that, for some algorithms, the improvement from the straightforward modification may not be obvious. There is no free lunch!

I just found that the original post had some typos in the discussion of how to modify the iterative reweighted L1 algorithms. The modified part is not the penalty, but the weighting. I've now corrected it.

(PS: The modification in paper [2] is correct. If you read that paper rather than this post, you don't need to worry about getting the wrong message.)

Zhilin (September 5, 2011)

Respected Sir,

I am Amit from India, doing an M.Tech project on the application of compressive sensing to wireless sensor networks. Currently I am working on the M-FOCUSS algorithm.

I have implemented the M-FOCUSS algorithm and it's giving good results for one-dimensional data. Now I want to extend it to images, but I am struggling to apply it to them. I tried two approaches.

1. I divided the image into a number of non-overlapping blocks of size 16*16, converted each block into a column vector, and processed the resulting column vector. But if I do so, blocking artifacts are visible in the reconstructed image.

2. I took one column of the image at a time and processed the corresponding column vector. If I do so, there are still blocking artifacts.

Can you guide me on applying the M-FOCUSS algorithm to images? If possible, please share demo MATLAB code. I am really struggling a lot. I appreciate your help.

Thanks in advance.