Since my previous work focused on ICA with applications to ECG, I have a strong interest in compressed sensing applied to ECG telemonitoring via wireless body-area networks. This is a promising application of compressed sensing because the ECG signal is "believed" to be sparse and compressed sensing can save much power. Thus, I read dozens of papers on this emerging application. But I have to say, I am totally confused by current work in this direction. My main confusion is that very little work seriously considers the noise.

You may ask: where is the noise? Let's see the basic compressed sensing model:

y = A x + v.

Of course, provided the sensor devices are of high quality, the noise vector v can be very small. However, the signal x (i.e. the recorded ECG signal before compression) contains strong noise!!! Note that the application is telemonitoring via wireless body-area networks. Simply put, a battery-powered device is put on your body to record various physiological data and send these data (via Bluetooth) to your cell phone, iPhone, iPad, etc. for advanced processing, and then these data are further sent to remote terminals for other use. In this application, you are free to walk around. Each movement, even a very small one, may result in large disturbance and noise in the recorded signal.
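To make the point concrete, here is a minimal sketch in Python (NumPy). It is not taken from any of the papers I read; the function name, the dimensions, the Gaussian sensing matrix and the noise levels are all assumptions chosen only for illustration. It writes the recorded signal as x + e, where e is the noise in the signal itself, so the measurement becomes y = A(x + e) + v and the effective noise seen by a recovery algorithm is A e + v.

```python
import numpy as np

def simulate_noisy_cs(n=512, m=128, k=10,
                      signal_noise_std=0.1, sensor_noise_std=0.001, seed=0):
    """Simulate y = A (x + e) + v with noise e inside the signal itself.

    All sizes and noise levels are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)

    # Clean k-sparse signal x (hypothetical stand-in for a sparse ECG).
    x = np.zeros(n)
    support = rng.choice(n, size=k, replace=False)
    x[support] = rng.normal(size=k)

    # Noise in the signal before compression (e.g. movement, breathing).
    e = signal_noise_std * rng.normal(size=n)

    # Small sensor noise added after compression.
    v = sensor_noise_std * rng.normal(size=m)

    # Random Gaussian sensing matrix (an assumption, not a requirement).
    A = rng.normal(size=(m, n)) / np.sqrt(m)

    y = A @ (x + e) + v
    effective_noise = A @ e + v  # what a solver for y = A x + noise faces
    return x, e, v, A, y, effective_noise

if __name__ == "__main__":
    x, e, v, A, y, eff = simulate_noisy_cs()
    print("nonzeros in clean x:      ", np.count_nonzero(x))
    print("nonzeros in recorded x+e: ", np.count_nonzero(x + e))
    print("norm of sensor noise v:   ", np.linalg.norm(v))
    print("norm of effective noise:  ", np.linalg.norm(eff))
```

Even with a nearly perfect sensor (tiny v), the recorded signal x + e is no longer sparse, and the effective noise is dominated by A e rather than by v.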

To get a basic feeling for this, I paste an ECG signal recorded from the abdomen of a pregnant woman who was lying quietly on a bed (not walking). So the major noise comes from her breathing. (I know ECG sensors are generally put on the chest. This example is just to show the noise amplitude and how it changes the sparsity of the signal.) Let's see the raw ECG data:

Can you see the noise from her breathing? Is the signal sparse or compressible? You may use some threshold to remove the noise, but you can lose some important components of the ECG signal (e.g. P wave, T wave, etc.). Also, the threshold should be data-adaptive. Since different people have ECGs with different amplitudes, and the contact quality between sensor and skin also affects the signal amplitude, you need some algorithm to adaptively choose a suitable threshold. Such a thresholding algorithm also increases the complexity of the chip design and the power consumption, which can make this application of compressed sensing infeasible. Note that the woman was lying quietly on the bed. In a real application of body-area networks, the noise from arm movement, walking, or even running is far larger than this.

So, I strongly suggest that future work on this topic should seriously consider the noise from movement, and should derive "super" compressed sensing algorithms for this application. The use of the MIT-BIH dataset (which has been used in many existing papers) is thus not suitable. In one of my papers in preparation, I tried many famous algorithms and all of them failed. A main reason is that the field of compressed sensing lacks algorithms that consider the noise in the signal itself.

My blogs reporting quantitative financial analysis, artificial intelligence for stock investment & trading, and latest progress in signal processing and machine learning

Monday, September 19, 2011

Sunday, September 11, 2011

Erroneous analyses widely exist in neuroscience (and beyond)

Today Neuroskeptic posted a new blog entry: "Neuroscience Fails Stats 101?", which introduced a recently published paper:

S.Nieuwenhuis, B.U.Forstmann, and E-J Wagenmakers, Erroneous analyses of interactions in neuroscience: a problem of significance, Nature Neuroscience, vol. 14, no. 9, 2011

The paper mainly discusses significance tests. However, I have to say, when people apply machine learning techniques to neuroscience data (e.g. EEG, fMRI), erroneous analyses (even logically wrong ones) also exist. Sometimes the errors are not explicit, which makes them more harmful.

One example is the application of ICA to EEG/MEG/fMRI data. A key assumption of ICA is the independence or uncorrelatedness of the "sources". This assumption is obviously violated in these neuroscience data. But some people seem to be too brave when using ICA for analysis.

I am not saying using ICA to analyze neuroscience data is wrong. My point is: people should be more careful when using it:

(1) First, you should deeply understand ICA. You need to read enough classical papers, or even carefully read a book (e.g. A. Hyvarinen's book, Independent Component Analysis).

I have seen some people read only one or two papers and then jump into the "ICA-analysis" job. Due to the availability of various ICA toolboxes for neuroscience, some people did not read any paper at all, and could not even write the basic ICA model correctly (really!).
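For reference, the basic (noise-free, instantaneous) ICA model can be written as below. The notation is my own choice for this note: x(t) is the vector of observed channels, s(t) the vector of unknown sources, A the unknown mixing matrix, and W the unmixing matrix that ICA estimates.

```latex
\mathbf{x}(t) = \mathbf{A}\,\mathbf{s}(t), \qquad
\hat{\mathbf{s}}(t) = \mathbf{W}\,\mathbf{x}(t), \qquad
\mathbf{W}\mathbf{A} \approx \mathbf{P}\,\mathbf{D}
```

Here the components of s(t) are assumed mutually statistically independent, with at most one of them Gaussian, and the sources can only be recovered up to a permutation P and a diagonal scaling D.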

This is very dangerous, because ICA is a complicated model and, unfortunately, neuroscience is an even more complicated field (probably the most complicated field in science). Nobody in the world has exact knowledge of the "sources" of EEG/MEG/fMRI data. As a result, people do not know whether the ICA separation is successful. This is different from other fields, where people can easily tell whether their ICA is successful. For example, when people use ICA to separate speech signals, they can listen to the separated signals to judge whether the separation succeeded. But in neuroscience, you CANNOT. We still lack much knowledge about these "sources" of EEG/MEG/fMRI data. This requires analysts to deeply understand the mathematical tools they are using: their sensitivity, their robustness, all the ways they can fail, etc.

It has been observed that ICA can split a signal emitted from one active brain area into two or more "independent sources". It has been observed that ICA only provides a temporally averaged spatial distribution. It has also been observed that ICA fails when several brain activities are coupled. However, all these warnings are ignored by those brave people.

(2) Be careful when chaining two or more advanced machine learning analyses (e.g. ICA separation in one domain followed by ICA separation in another domain, ICA followed by another exploratory data analysis, etc.). Because the ICA model does not exactly match neuroscience data, errors always exist. However, we have no knowledge of the errors from ICA. So the errors from ICA are unpredictable, and they can be unpredictably amplified when we apply another advanced machine learning algorithm after ICA. The same goes for the successive use of other advanced algorithms.

In summary, ICA is a tiger, and to control it, the controller needs to be very skilled; otherwise, the controller will be seriously harmed by it.

---------------------------------------------------------------------------------------------

Nepenthes x dyeriana.

This nepenthes was given to me by my friend Bob as a gift. It is a rare hybrid. The photo was taken by my friend Luo.

Bayesian Group Lasso Using Non-MCMC?

Recently I read several papers on Bayesian group Lasso. A common characteristic of these works is that they adopt the MCMC approach for inference. Due to MCMC, these algorithms unfortunately run very slowly. I am wondering whether there exists a Bayesian group Lasso that does not rely on MCMC?

Thursday, September 1, 2011

2011 Impact Factor of Journals

The newest JCR report came out in June. The following are some journals in my research scope. Of course, impact factors do not reflect everything about a paper; publication in a journal with a high impact factor does not mean a paper is better than one published in a journal with a lower impact factor. So, just for fun.

Signal Processing:

IEEE Signal Processing Magazine (Impact Factor: 5.86)

IEEE Transactions on Signal Processing (TSP) (Impact Factor: 2.651)

IEEE Journal of Selected Topics in Signal Processing (J-STSP) (Impact Factor: 2.647)

Elsevier Signal Processing (Impact Factor: 1.351)

IEEE Signal Processing Letters (Impact Factor: 1.165)

EURASIP Journal on Advances in Signal Processing (EURASIP JASP) (Impact Factor: 1.012)

Biomedical Signal Processing:

NeuroImage (Impact Factor: 5.932)

Human Brain Mapping (Impact Factor: 5.107)

IEEE Transactions on Medical Imaging (Impact Factor: 3.545)

IEEE Transactions on Neural Systems and Rehabilitation Engineering (Impact Factor: 2.182)

Journal of Neuroscience Methods (Impact Factor: 2.1)

IEEE Transactions on Biomedical Engineering (Impact Factor: 1.782)

---------------------------------------------------------------

Nepenthes jamban

The following picture won first prize in the POTM Contest in July. It is the first time I have won it :)

Wednesday, August 24, 2011

How To Choose a Good Scientific Problem

When I do experiments, I always like to read some easy papers, such as reviews, surveys, or other academic material. Tonight (or, in fact, early this morning) I read an interesting paper:

Uri Alon, How to choose a good scientific problem, Molecular Cell 35, 2009.

Abstract: Choosing good problems is essential for being a good scientist. But what is a good problem, and how do you choose one? The subject is not usually discussed explicitly within our profession. Scientists are expected to be smart enough to figure it out on their own and through the observation of their teachers. This lack of explicit discussion leaves a vacuum that can lead to approaches such as choosing problems that can give results that merit publication in valued journals, resulting in a job and tenure.

This paper gives several suggestions to both students/post-docs and mentors (especially young assistant professors who are starting to build their labs). Although the paper was written for people in the biology field, it is helpful to people in any field.

There are several good suggestions for students and young professors. I pick three of them:

(1) Think over a topic for enough time (e.g. 3 months) before starting work on it. Fully consider the feasibility and the interest of the topic.

(2) Listen to your inner voice, not the voice of those who are around you or around the conferences. Namely, choose the topic that you are really interested in, not the one others are interested in.

(3) A research road is not a straight line from the beginning to the destination. There are many loops and circles (the author calls this the 'cloud') between your beginning and the destination (as shown in the figure). And most probably, your destination is not the original destination; you find another, more interesting problem and start to solve it.

Friday, August 19, 2011

IBM Unveils Cognitive Computing Chips

Continuing the discussion here, Hasan Al Marzouqi sent me a news item from IBM, which aroused my strong interest. Here it is (http://www-03.ibm.com/press/us/en/pressrelease/35251.wss):

ARMONK, N.Y., - 18 Aug 2011: Today, IBM (NYSE: IBM) researchers unveiled a new generation of experimental computer chips designed to emulate the brain’s abilities for perception, action and cognition. The technology could yield many orders of magnitude less power consumption and space than used in today’s computers.

In a sharp departure from traditional concepts in designing and building computers, IBM’s first neurosynaptic computing chips recreate the phenomena between spiking neurons and synapses in biological systems, such as the brain, through advanced algorithms and silicon circuitry. Its first two prototype chips have already been fabricated and are currently undergoing testing.

Called cognitive computers, systems built with these chips won’t be programmed the same way traditional computers are today. Rather, cognitive computers are expected to learn through experiences, find correlations, create hypotheses, and remember – and learn from – the outcomes, mimicking the brains structural and synaptic plasticity.

To do this, IBM is combining principles from nanoscience, neuroscience and supercomputing as part of a multi-year cognitive computing initiative. The company and its university collaborators also announced they have been awarded approximately $21 million in new funding from the Defense Advanced Research Projects Agency (DARPA) for Phase 2 of the Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) project.

The goal of SyNAPSE is to create a system that not only analyzes complex information from multiple sensory modalities at once, but also dynamically rewires itself as it interacts with its environment – all while rivaling the brain’s compact size and low power usage. The IBM team has already successfully completed Phases 0 and 1.

“This is a major initiative to move beyond the von Neumann paradigm that has been ruling computer architecture for more than half a century,” said Dharmendra Modha, project leader for IBM Research. “Future applications of computing will increasingly demand functionality that is not efficiently delivered by the traditional architecture. These chips are another significant step in the evolution of computers from calculators to learning systems, signaling the beginning of a new generation of computers and their applications in business, science and government.”

Neurosynaptic Chips

While they contain no biological elements, IBM’s first cognitive computing prototype chips use digital silicon circuits inspired by neurobiology to make up what is referred to as a “neurosynaptic core” with integrated memory (replicated synapses), computation (replicated neurons) and communication (replicated axons).

IBM has two working prototype designs. Both cores were fabricated in 45 nm SOI-CMOS and contain 256 neurons. One core contains 262,144 programmable synapses and the other contains 65,536 learning synapses. The IBM team has successfully demonstrated simple applications like navigation, machine vision, pattern recognition, associative memory and classification.

IBM’s overarching cognitive computing architecture is an on-chip network of light-weight cores, creating a single integrated system of hardware and software. This architecture represents a critical shift away from traditional von Neumann computing to a potentially more power-efficient architecture that has no set programming, integrates memory with processor, and mimics the brain’s event-driven, distributed and parallel processing.

IBM’s long-term goal is to build a chip system with ten billion neurons and hundred trillion synapses, while consuming merely one kilowatt of power and occupying less than two liters of volume.

Why Cognitive Computing

Future chips will be able to ingest information from complex, real-world environments through multiple sensory modes and act through multiple motor modes in a coordinated, context-dependent manner.

For example, a cognitive computing system monitoring the world's water supply could contain a network of sensors and actuators that constantly record and report metrics such as temperature, pressure, wave height, acoustics and ocean tide, and issue tsunami warnings based on its decision making. Similarly, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag bad or contaminated produce. Making sense of real-time input flowing at an ever-dizzying rate would be a Herculean task for today’s computers, but would be natural for a brain-inspired system.

“Imagine traffic lights that can integrate sights, sounds and smells and flag unsafe intersections before disaster happens or imagine cognitive co-processors that turn servers, laptops, tablets, and phones into machines that can interact better with their environments,” said Dr. Modha.

For Phase 2 of SyNAPSE, IBM has assembled a world-class multi-dimensional team of researchers and collaborators to achieve these ambitious goals. The team includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison.

IBM has a rich history in the area of artificial intelligence research going all the way back to 1956 when IBM performed the world's first large-scale (512 neuron) cortical simulation. Most recently, IBM Research scientists created Watson, an analytical computing system that specializes in understanding natural human language and provides specific answers to complex questions at rapid speeds. Watson represents a tremendous breakthrough in computers understanding natural language, “real language” that is not specially designed or encoded just for computers, but language that humans use to naturally capture and communicate knowledge.

IBM’s cognitive computing chips were built at its highly advanced chip-making facility in Fishkill, N.Y. and are currently being tested at its research labs in Yorktown Heights, N.Y. and San Jose, Calif.

For more information about IBM Research, please visit ibm.com/research.

Wednesday, August 17, 2011

Looking for more compressed sensing algorithms for cluster-structured sparse signals

I am now deriving some algorithms for cluster-structured sparse signals (and block-sparse signals). I plan to do some experiments comparing mine with existing algorithms. Generally, my algorithms do not need any information about the cluster size, cluster number, cluster partition, etc. So my algorithms can be compared with most, if not all, existing algorithms. However, so far I have only compared against classic algorithms, such as group Lasso, overlap group Lasso, DGS, BCS-MCMC, and block OMP and its variants (I don't know why, but these OMP algorithms perform very poorly, especially in noisy cases). Although a number of papers have proposed state-of-the-art algorithms, their code is not available online. If you, my dear readers, happen to know some good algorithms (with code available online), please let me know. Thank you.
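As a reminder of what the group Lasso baseline does with block structure, here is a minimal sketch of its block soft-thresholding step (the proximal operator of the sum of group l2 norms). This is not one of my algorithms; the function name and the toy input are hypothetical, and the sketch assumes known, non-overlapping groups, which is exactly the prior information that group Lasso needs.

```python
import numpy as np

def group_soft_threshold(x, groups, lam):
    """Block soft-thresholding for non-overlapping groups.

    x      : 1-D array of coefficients
    groups : list of index lists, one per group (assumed known)
    lam    : threshold; a block with l2 norm <= lam is set to zero
    """
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    for idx in groups:
        block = x[idx]
        norm = np.linalg.norm(block)
        if norm > lam:
            # Shrink the whole block toward zero by lam in l2 norm.
            out[idx] = (1.0 - lam / norm) * block
    return out

if __name__ == "__main__":
    x = np.array([3.0, 4.0, 0.1, 0.1])
    print(group_soft_threshold(x, [[0, 1], [2, 3]], lam=1.0))
```

The whole block is kept or discarded together, which is why a wrong group partition can hurt such methods.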

Friday, August 5, 2011

The most beautiful picture

I know this is an academic blog. But forgive me. I want to post this picture to share my greatest happiness with all of you.

Monday, July 25, 2011

Probably the first paper on multiple measurement vector (MMV) model

I uploaded the workshop paper by Prof. B.D. Rao and Prof. K. Kreutz-Delgado, which was presented at the 8th IEEE Digital Signal Processing Workshop, Bryce Canyon, UT, 1998. The workshop paper can be downloaded here.

Probably this is the first paper on the multiple measurement vector (MMV) model. As you can see, the MMV versions of FOCUSS, Matching Pursuit, Order Recursive Matching Pursuit, and Modified Matching Pursuit were all presented in this paper. These contents were fully discussed and extended in their journal paper under the same title (Sparse solutions to linear inverse problems with multiple measurement vectors). But unfortunately, the journal paper was published seven years later!!!
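For readers new to the topic, the MMV model is usually written as below. The symbols are my own notational choice: Y collects L measurement vectors as columns, A is the known dictionary, X is the unknown solution matrix, and V is noise.

```latex
\mathbf{Y} = \mathbf{A}\,\mathbf{X} + \mathbf{V}, \qquad
\mathbf{Y} \in \mathbb{R}^{M \times L},\;
\mathbf{A} \in \mathbb{R}^{M \times N},\;
\mathbf{X} \in \mathbb{R}^{N \times L}
```

The key assumption is that X has only a few nonzero rows, i.e. all L columns share the same sparsity support. With L = 1 the model reduces to the usual single measurement vector model y = A x + v.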

Thursday, July 21, 2011

Academic Software Applications for Electromagnetic Brain Mapping Using MEG and EEG

There is a special issue of Computational Intelligence and Neuroscience, coedited by Sylvain Baillet, Karl Friston and Robert Oostenveld, on Academic Software Applications for Electromagnetic Brain Mapping Using MEG and EEG. The articles are available at: http://www.hindawi.com/journals/cin/2011/si.1/

The following is the table of contents. You will see that many well-known software packages are discussed in this special issue.

Academic Software Applications for Electromagnetic Brain Mapping Using MEG and EEG, Sylvain Baillet, Karl Friston, and Robert Oostenveld

Volume 2011 (2011), Article ID 972050, 4 pages

Brainstorm: A User-Friendly Application for MEG/EEG Analysis, François Tadel, Sylvain Baillet, John C. Mosher, Dimitrios Pantazis, and Richard M. Leahy

Volume 2011 (2011), Article ID 879716, 13 pages

Spatiotemporal Analysis of Multichannel EEG: CARTOOL, Denis Brunet, Micah M. Murray, and Christoph M. Michel

Volume 2011 (2011), Article ID 813870, 15 pages

EEGLAB, SIFT, NFT, BCILAB, and ERICA: New Tools for Advanced EEG Processing, Arnaud Delorme, Tim Mullen, Christian Kothe, Zeynep Akalin Acar, Nima Bigdely-Shamlo, Andrey Vankov, and Scott Makeig

Volume 2011 (2011), Article ID 130714, 12 pages

ELAN: A Software Package for Analysis and Visualization of MEG, EEG, and LFP Signals, Pierre-Emmanuel Aguera, Karim Jerbi, Anne Caclin, and Olivier Bertrand

Volume 2011 (2011), Article ID 158970, 11 pages

ElectroMagnetoEncephalography Software: Overview and Integration with Other EEG/MEG Toolboxes, Peter Peyk, Andrea De Cesarei, and Markus Junghöfer

Volume 2011 (2011), Article ID 861705, 10 pages

FieldTrip: Open Source Software for Advanced Analysis of MEG, EEG, and Invasive Electrophysiological Data, Robert Oostenveld, Pascal Fries, Eric Maris, and Jan-Mathijs Schoffelen

Volume 2011 (2011), Article ID 156869, 9 pages

MEG/EEG Source Reconstruction, Statistical Evaluation, and Visualization with NUTMEG, Sarang S. Dalal, Johanna M. Zumer, Adrian G. Guggisberg, Michael Trumpis, Daniel D. E. Wong, Kensuke Sekihara, and Srikantan S. Nagarajan

Volume 2011 (2011), Article ID 758973, 17 pages

EEG and MEG Data Analysis in SPM8, Vladimir Litvak, Jérémie Mattout, Stefan Kiebel, Christophe Phillips, Richard Henson, James Kilner, Gareth Barnes, Robert Oostenveld, Jean Daunizeau, Guillaume Flandin, Will Penny, and Karl Friston

Volume 2011 (2011), Article ID 852961, 32 pages

EEGIFT: Group Independent Component Analysis for Event-Related EEG Data, Tom Eichele, Srinivas Rachakonda, Brage Brakedal, Rune Eikeland, and Vince D. Calhoun

Volume 2011 (2011), Article ID 129365, 9 pages

LIMO EEG: A Toolbox for Hierarchical LInear MOdeling of ElectroEncephaloGraphic Data, Cyril R. Pernet, Nicolas Chauveau, Carl Gaspar, and Guillaume A. Rousselet

Volume 2011 (2011), Article ID 831409, 11 pages

Ragu: A Free Tool for the Analysis of EEG and MEG Event-Related Scalp Field Data Using Global Randomization Statistics, Thomas Koenig, Mara Kottlow, Maria Stein, and Lester Melie-García

Volume 2011 (2011), Article ID 938925, 14 pages

BioSig: The Free and Open Source Software Library for Biomedical Signal Processing, Carmen Vidaurre, Tilmann H. Sander, and Alois Schlögl

Volume 2011 (2011), Article ID 935364, 12 pages

Craniux: A LabVIEW-Based Modular Software Framework for Brain-Machine Interface Research, Alan D. Degenhart, John W. Kelly, Robin C. Ashmore, Jennifer L. Collinger, Elizabeth C. Tyler-Kabara, Douglas J. Weber, and Wei Wang

Volume 2011 (2011), Article ID 363565, 13 pages

rtMEG: A Real-Time Software Interface for Magnetoencephalography, Gustavo Sudre, Lauri Parkkonen, Elizabeth Bock, Sylvain Baillet, Wei Wang, and Douglas J. Weber

Volume 2011 (2011), Article ID 327953, 7 pages

BrainNetVis: An Open-Access Tool to Effectively Quantify and Visualize Brain Networks, Eleni G. Christodoulou, Vangelis Sakkalis, Vassilis Tsiaras, and Ioannis G. Tollis

Volume 2011 (2011), Article ID 747290, 12 pages

fMRI Artefact Rejection and Sleep Scoring Toolbox, Yves Leclercq, Jessica Schrouff, Quentin Noirhomme, Pierre Maquet, and Christophe Phillips

Volume 2011 (2011), Article ID 598206, 11 pages

Highly Automated Dipole EStimation (HADES), C. Campi, A. Pascarella, A. Sorrentino, and M. Piana

Volume 2011 (2011), Article ID 982185, 11 pages

Forward Field Computation with OpenMEEG, Alexandre Gramfort, Théodore Papadopoulo, Emmanuel Olivi, and Maureen Clerc

Volume 2011 (2011), Article ID 923703, 13 pages

PyEEG: An Open Source Python Module for EEG/MEG Feature Extraction, Forrest Sheng Bao, Xin Liu, and Christina Zhang

Volume 2011 (2011), Article ID 406391, 7 pages

TopoToolbox: Using Sensor Topography to Calculate Psychologically Meaningful Measures from Event-Related EEG/MEG, Xing Tian, David Poeppel, and David E. Huber

Volume 2011 (2011), Article ID 674605, 8 pages

Thursday, July 7, 2011

When Bayes Meets Big Data

In the June issue of The ISBA Bulletin, Michael Jordan wrote an article titled "The Era of Big Data". The article discusses the possibilities and challenges of applying Bayesian techniques to Big Data (e.g. terabytes, petabytes, exabytes and zettabytes). Michael pointed out several advantages of Bayesian over non-Bayesian approaches, which I quote here:

(1) Analyses of Big Data often have an exploratory flavor rather than a confirmatory flavor. Some of the concerns over family-wise error rates that bedevil classical approaches to exploratory data analysis are mitigated in the Bayesian framework.

(2) In the sciences, Big Data problems often arise in the context of “standard models,” which are often already formulated in probabilistic terms. That is, significant prior knowledge is often present and directly amenable to Bayesian inference.

(3) Consider a company wishing to offer personalized services to tens of millions of users. Large amounts of data will have been collected for some users, but for most users there will be little or no data. Such situations cry out for Bayesian hierarchical modeling.

(4) The growing field of Bayesian nonparametrics provides tools for dealing with situations in which phenomena continue to emerge as data are collected. For example, Bayesian nonparametrics not only provides probability models that yield power-law distributions, but it provides inferential machinery that incorporate these distributions.

Based on my experience with compressed sensing, I feel that Bayesian methods provide a more flexible way to exploit structured sparsity, and I do not see how non-Bayesian methods can match this flexibility. However, Bayesian methods are computationally demanding. So combining Bayesian and non-Bayesian methods is my research theme in compressed sensing. This is why I wrote these two papers:

Z. Zhang, B. D. Rao, Iterative Reweighted Algorithms for Sparse Signal Recovery with Temporally Correlated Source Vectors, ICASSP 2011

Z. Zhang, B. D. Rao, Exploiting Correlation in Sparse Signal Recovery Problems: Multiple Measurement Vectors, Block Sparsity, and Time-Varying Sparsity, ICML 2011 Workshop on Structured Sparsity

Above pictures: Nepenthes jamban (growing on my patio)

This rare species was discovered on the island of Sumatra in Indonesia in 2005. The pitchers have a unique toilet shape, so the plant was affectionately named jamban, which means toilet in Indonesian.

Tuesday, June 28, 2011

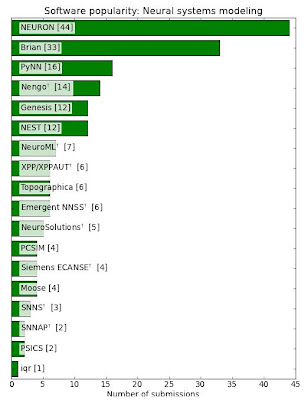

Neuroscience software survey: What is popular, what has problems?

There is an excellent survey of neuroscience software, carried out by Yaroslav Halchenko and his colleague. The results are here: http://neuro.debian.net/survey/2011/results.html

The primary analyses have been published in: Hanke, M. & Halchenko, Y. O. (2011). Neuroscience runs on GNU/Linux. Frontiers in Neuroinformatics, 5:8.

I picked several figures from their results that relate to my research interests:

Thursday, June 23, 2011

ICAtoolbox 3.8 is available now for download

Previously, on my homepage I only provided the contents file of the toolbox, and I promised that I would release the toolbox once I had translated the code descriptions into English. However, since 2009, when I switched my interest to sparse signal recovery/compressed sensing, I have had no time to do the translation. So I have decided to release it now, and I apologize that some of the code has descriptions/comments written in Chinese. If anybody has questions, feel free to contact me.

The toolbox can be found on my software page: http://dsp.ucsd.edu/~zhilin/Software.html

PS: Please note that the toolbox may include several algorithm codes written by other people. The authors' names are given in the code descriptions.

You can call me "Zorro" instead of "Zhilin" if you like

During my studies in the US, I have found that many people don't know how to pronounce my first name "Zhilin", or have difficulty remembering it. So I have decided to give myself a nickname that people can easily remember and pronounce. I chose "Zorro", since I liked Zorro very much in my childhood and my wife said that I have several obvious characteristics in common with Zorro (except that I don't know how to fight :) ).

Open problems in Bayesian statistics

Mike Jordan wrote a report on his interesting survey of 50 statisticians, in which he asked them what the open problems in Bayesian statistics are. Here is his report: http://members.bayesian.org/sites/default/files/fm/bulletins/1103.pdf

The top open problems in his report are as follows:

No.1. Model selection and hypothesis testing.

No.2. Computation and statistics.

No.3. Bayesian/frequentist relationships

No.4. Priors

No.5. Nonparametrics and semiparametrics

Andrew Gelman made excellent comments on these problems. Here is the link: http://statisticsforum.wordpress.com/2011/04/28/what-are-the-open-problems-in-bayesian-statistics/
